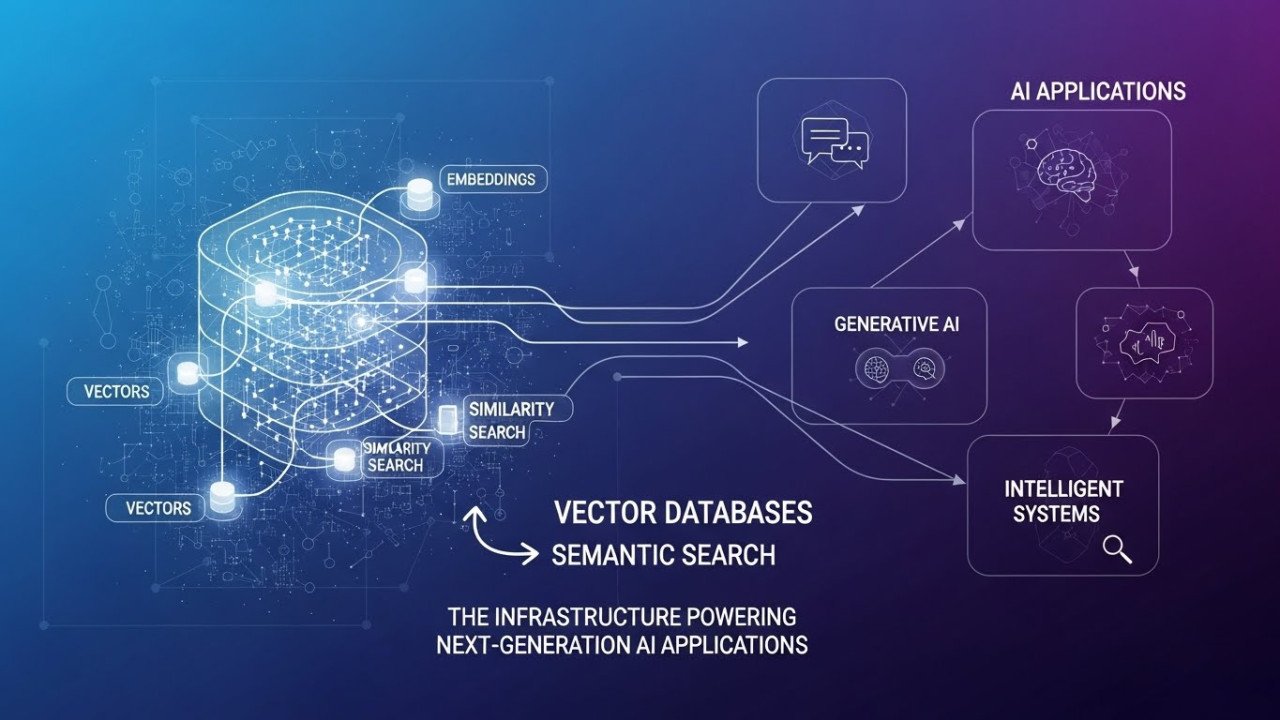

Vector Databases and Semantic Search: The Infrastructure Powering Next-Generation AI Applications

The Search Revolution: From Keywords to Meaning

Traditional search engines match keywords. Semantic search matches meaning. This distinction powers modern AI applications. When you search "how to handle webhook failures," a keyword system finds pages containing those exact words. A semantic search system finds pages about error handling, retry logic, and event-driven architecture—conceptually similar even if terminology differs. Vector databases make semantic search possible by storing data as numerical vectors representing meaning, not keywords. This shift unlocked retrieval-augmented generation (RAG), which powers the majority of production AI applications built in 2025.

The infrastructure matured rapidly. In 2023, vector databases were experimental tools. By 2025, they're mission-critical: Notion uses vector search for document retrieval, Stripe uses it for API documentation discovery, legal firms use it to find precedent cases by conceptual similarity, and e-commerce platforms use it for visual product search. The market expanded to $1.2 billion annually with 15+ production-ready platforms competing. The question shifted from "Should we use vector databases?" to "Which one? Self-hosted or managed? At what cost?"

How Vector Databases Work: The Technical Foundation

Embeddings: Converting Text to Meaning

Vector databases don't store text directly. They store embeddings: numerical representations in which semantic meaning becomes geometric distance. A sentence becomes a 768- or 1,536-dimensional vector, and sentences with similar meaning have vectors pointing in similar directions. A query is converted into a vector too, and the database finds the stored vectors closest to it (its nearest neighbors), returning the most semantically similar results.
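A minimal sketch of exact (brute-force) nearest-neighbor search by cosine similarity makes the geometry concrete. The 3-dimensional vectors are invented stand-ins for real embeddings, and the function name `cosine_top_k` is illustrative; a vector database replaces this linear scan with an index.

```python
import numpy as np

def cosine_top_k(query: np.ndarray, vectors: np.ndarray, k: int) -> list[int]:
    """Illustrative helper: indices of the k stored vectors most similar to `query`."""
    # Normalize so that a dot product equals cosine similarity.
    q = query / np.linalg.norm(query)
    v = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
    scores = v @ q  # one similarity score per stored vector
    # Sort by descending similarity and keep the first k indices.
    return np.argsort(-scores)[:k].tolist()

# Toy "embeddings": rows 0 and 1 point in similar directions, row 2 does not.
docs = np.array([
    [0.9, 0.1, 0.0],  # e.g. "retry logic for failed webhooks"
    [0.8, 0.2, 0.1],  # e.g. "handling event delivery errors"
    [0.0, 0.1, 0.9],  # e.g. "quarterly sales report"
])
query = np.array([1.0, 0.15, 0.0])
print(cosine_top_k(query, docs, k=2))  # [0, 1]
```

Normalizing first lets a single matrix-vector product score every stored vector; this full scan is exactly the step that becomes too slow at billions of vectors.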

Embedding quality determines search quality. OpenAI's text-embedding-3-large (3,072 dimensions by default) captures nuance precisely but consumes more compute and storage. Google's gecko model (768 dimensions) balances quality and efficiency. Smaller embeddings (256 dimensions) offer speed but lose semantic resolution. For most RAG applications, 512-dimensional vectors provide the industry-standard balance between accuracy and cost.

Approximate Nearest Neighbor Search

Finding exact nearest neighbors across billions of vectors is computationally prohibitive. Vector databases therefore use approximate nearest neighbor (ANN) algorithms, which accept a small loss in accuracy in exchange for massive speed gains. Searching 1 billion 768-dimensional vectors with an exact algorithm takes 5-20 minutes; ANN algorithms complete the same search in 30-100 milliseconds, several orders of magnitude faster.

The cost: a well-tuned ANN index typically returns about 99.5% of the results an exact search would (99.5% recall). In practice, the difference is imperceptible. Users see only the top 10 results, and exact and approximate methods return identical top 5 results more than 99% of the time. For production systems, speed matters more than exhaustive precision.
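Recall figures like these are usually measured as recall@k: the fraction of the true top-k neighbors (from exact search) that the approximate search also returns. A minimal sketch with made-up result IDs for a single query; the function name is illustrative, and in practice recall is averaged over many test queries.

```python
def recall_at_k(exact_ids: list[int], approx_ids: list[int], k: int) -> float:
    """Illustrative helper: share of the exact top-k that the ANN top-k also contains."""
    truth = set(exact_ids[:k])
    found = set(approx_ids[:k])
    return len(truth & found) / k

# Hypothetical results for one query: the ANN index missed document 7 and returned 12.
exact = [3, 9, 1, 7, 4, 8, 2, 5, 6, 0]
approx = [3, 9, 1, 4, 8, 2, 5, 6, 0, 12]
print(recall_at_k(exact, approx, k=10))  # 0.9
print(recall_at_k(exact, approx, k=3))   # 1.0
```

A single miss deep in the list costs recall@10 but not recall@3, which is why approximate search is rarely noticeable in the handful of results users actually read.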

Indexing Strategies and Trade-Offs

Vector databases use multiple indexing approaches optimized for different scenarios. HNSW (Hierarchical Navigable Small World) indexes provide fast search with moderate memory overhead and are the default in most databases. IVF (Inverted File) indexes, especially when combined with vector compression such as product quantization, reduce memory consumption by 50-70% but search more slowly. DiskANN indexes offload vectors to disk, enabling billion-scale storage on modest hardware at the cost of added disk I/O latency. Some databases select an index type automatically based on dataset size and query patterns.
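The core idea behind IVF fits in a few dozen lines of NumPy: cluster the vectors with k-means, keep an inverted list of members per cluster, and at query time scan only the `nprobe` clusters whose centroids are nearest the query. This is a toy illustration under simplifying assumptions (plain Euclidean distance, no compression, arbitrary cluster and iteration counts); the class name `ToyIVFIndex` is hypothetical, and libraries such as FAISS implement the technique far more efficiently.

```python
import numpy as np

class ToyIVFIndex:
    """Hypothetical toy IVF index: k-means centroids plus per-cluster member lists."""

    def __init__(self, n_clusters: int, n_iters: int = 10, seed: int = 0):
        self.n_clusters = n_clusters
        self.n_iters = n_iters
        self.rng = np.random.default_rng(seed)

    def _nearest_centroids(self, x: np.ndarray, n: int) -> np.ndarray:
        # Squared Euclidean distance from each row of x to every centroid.
        d = ((x[:, None, :] - self.centroids[None, :, :]) ** 2).sum(axis=2)
        return np.argsort(d, axis=1)[:, :n]

    def build(self, vectors: np.ndarray) -> None:
        self.vectors = vectors
        # Start from randomly chosen data points, then refine with k-means.
        start = self.rng.choice(len(vectors), self.n_clusters, replace=False)
        self.centroids = vectors[start].copy()
        for _ in range(self.n_iters):
            assign = self._nearest_centroids(vectors, 1)[:, 0]
            for c in range(self.n_clusters):
                members = vectors[assign == c]
                if len(members):  # keep the old centroid if a cluster ends up empty
                    self.centroids[c] = members.mean(axis=0)
        # Inverted lists: cluster id -> indices of the vectors assigned to it.
        assign = self._nearest_centroids(vectors, 1)[:, 0]
        self.lists = [np.flatnonzero(assign == c) for c in range(self.n_clusters)]

    def search(self, query: np.ndarray, k: int, nprobe: int) -> list[int]:
        # Only vectors in the nprobe closest clusters are compared with the query.
        probe = self._nearest_centroids(query[None, :], nprobe)[0]
        candidates = np.concatenate([self.lists[c] for c in probe])
        d = ((self.vectors[candidates] - query) ** 2).sum(axis=1)
        return candidates[np.argsort(d)[:k]].tolist()

rng = np.random.default_rng(42)
data = rng.normal(size=(2000, 32)).astype(np.float32)
index = ToyIVFIndex(n_clusters=20)
index.build(data)
approx = index.search(data[0], k=5, nprobe=3)   # scans roughly 3/20 of the data
exact = index.search(data[0], k=5, nprobe=20)   # probing every cluster = exact search
print(approx[0])  # 0: a stored vector is its own nearest neighbor
```

Raising `nprobe` scans more clusters, trading speed for recall; probing every cluster reduces to exact search.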

Vector Database Comparison: Performance and Cost

Managed Services vs. Self-Hosted

Pinecone (Managed): Query latency 40-50ms p95, throughput 5,000-10,000 queries/second, starting price $840/month. Easiest deployment: 5-line setup, fully managed infrastructure, auto-scaling. Cost at 10M vectors: ~$13,000/year. No infrastructure overhead. The trade-off is vendor lock-in.

Milvus (Self-Hosted Open-Source): Sub-50ms query latency with proper configuration, throughput 10,000-20,000 queries/second (higher than Pinecone), zero licensing cost. Catch: requires Kubernetes expertise, 4-8 weeks of deployment time, and ongoing maintenance. Infrastructure cost at 10M vectors: $800-1,400/month ($9,600-16,800/year). Becomes cheaper than Pinecone at 50-80M vectors.

Weaviate (Cloud SaaS): Query latency 50-70ms p95, throughput 3,000-8,000 queries/second, pricing $595-950/month depending on tier. The middle ground between managed simplicity and self-hosted cost. Auto-scaling included.

Qdrant (Self-Hosted or Cloud): Query latency 30-40ms (the fastest of the four platforms compared here), throughput 8,000-15,000 queries/second. Cloud pricing $240/month base tier. Excellent performance-to-cost ratio for medium-scale deployments (5-50M vectors).

Break-Even Analysis: When Self-Hosting Wins

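Pinecone: ~$13,000/year for 10M vectors. Qdrant self-hosted: ~$12,000/year (infrastructure) plus a one-time $2,400-6,000 setup. With only about $1,000/year in savings, break-even at this scale takes roughly 2.4-6 years. At 50M vectors, Pinecone costs $65,000+/year, while Qdrant self-hosted costs $48,000-60,000/year including personnel overhead, so even with the most expensive setup and a similar setup cost, self-hosting pays for itself in about 15 months. Self-hosting wins decisively at scale.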
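Break-even is the one-time setup cost divided by the annual saving. A small helper (the name `break_even_months` is hypothetical) applied to the figures quoted in this section shows why scale matters: at 10M vectors the roughly $1,000/year saving takes years to recover the setup cost, while at 50M vectors even the least favorable estimate pays back in just over a year. It assumes the same setup cost at both scales and ignores costs the figures do not break out, such as migration effort.

```python
def break_even_months(managed_per_year: float, self_hosted_per_year: float,
                      setup_cost: float) -> float:
    """Hypothetical helper: months until lower running costs repay a one-time setup cost."""
    annual_saving = managed_per_year - self_hosted_per_year
    if annual_saving <= 0:
        return float("inf")  # self-hosting never pays back
    return 12 * setup_cost / annual_saving

# 10M vectors: Pinecone ~$13,000/yr vs. Qdrant self-hosted ~$12,000/yr.
print(break_even_months(13_000, 12_000, 2_400))  # 28.8
print(break_even_months(13_000, 12_000, 6_000))  # 72.0
# 50M vectors, least favorable case: $65,000/yr vs. $60,000/yr, assumed $6,000 setup.
print(break_even_months(65_000, 60_000, 6_000))  # 14.4
```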

For 10-30M vectors (typical mid-market), managed services make sense. For 50M+ vectors (enterprise scale), self-hosted deployment becomes economically dominant. The trade-off is operational complexity—self-hosted requires DevOps expertise. Managed services require accepting vendor lock-in and premium pricing.

Hybrid Search: The Production Secret Weapon

Semantic search alone misses important patterns. A query for "zero balance accounts" in a banking system should match the exact phrase "zero balance". Pure semantic search might instead retrieve "low-balance warning systems" as similar: not wrong semantically, but wrong contextually. Hybrid search combines semantic search (dense vectors) with keyword search (sparse vectors scored with the BM25 algorithm), weighting each approach based on query type.
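For reference, the keyword side of hybrid search can be sketched as a compact Okapi BM25 scorer in plain Python. It assumes naive lowercase whitespace tokenization, the common defaults k1 = 1.2 and b = 0.75, and a Lucene-style IDF that stays positive; production engines add stemming, stop-word handling, and inverted indexes. The function name and sample documents are illustrative.

```python
import math
from collections import Counter

def bm25_scores(query: str, docs: list[str], k1: float = 1.2, b: float = 0.75) -> list[float]:
    """Illustrative helper: Okapi BM25 score of every document for the query."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(t) for t in tokenized) / n_docs
    # Document frequency: how many documents contain each term.
    df = Counter(term for t in tokenized for term in set(t))
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            # k1 saturates repeated terms; b normalizes for document length.
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

docs = [
    "zero balance accounts have no minimum deposit",
    "low balance warning systems notify customers",
    "interest rates for savings accounts",
]
print(bm25_scores("zero balance accounts", docs))  # document 0 scores highest
```

The document containing all three query terms scores far above documents that share only one term, which is the exact-match precision the semantic side lacks.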

When Each Approach Wins

Pure semantic search wins: Natural language questions, conceptual discovery, synonym handling. "How do I improve customer retention?" finds articles on churn reduction, engagement strategies, and loyalty programs, even without exact keyword matches.

Pure keyword search wins: Exact phrase matching, rare technical terms, product SKUs, and account numbers. "SKU-12345XYZ" should match exactly, not semantically.

Hybrid wins: Mixed queries combining both needs. "Find documentation on error handling using exponential backoff strategy" benefits from keyword precision on "exponential backoff" combined with semantic understanding that "error handling" relates to retry logic and failure recovery.

Implementation: Reciprocal Rank Fusion

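Hybrid search generates two result sets: one from keyword search (BM25) and one from semantic search (vector similarity). Reciprocal Rank Fusion (RRF) combines them using only each document's rank position: a document's score is the sum of 1/(k + rank) across the lists it appears in, where k is a smoothing constant (commonly 60). A document ranked #2 in keyword results and #5 in semantic results therefore scores 1/62 + 1/65 ≈ 0.0315, balancing both. Because RRF ignores the retrievers' raw scores, it requires no manual tuning of weights between them.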
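A minimal RRF sketch, assuming 1-based ranks and k = 60 (the constant used in the original RRF paper and a common default). The function name and document IDs are invented for illustration.

```python
from collections import defaultdict

def reciprocal_rank_fusion(result_lists: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Illustrative helper: fuse ranked lists, scoring each doc by sum(1 / (k + rank))."""
    scores: dict[str, float] = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

keyword_results = ["doc_a", "doc_x", "doc_b", "doc_c", "doc_d"]   # BM25 order
semantic_results = ["doc_b", "doc_e", "doc_f", "doc_g", "doc_x"]  # vector-similarity order
for doc_id, score in reciprocal_rank_fusion([keyword_results, semantic_results])[:3]:
    print(doc_id, round(score, 4))
```

Here `doc_x`, ranked #2 by keyword search and #5 by semantic search, scores 1/62 + 1/65 ≈ 0.0315 and places second, behind `doc_b`, which sits near the top of both lists. Documents found by only one retriever still receive a score, just a smaller one.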

Real-world results: Hybrid search achieves 15-25% higher precision than pure semantic search and 30-40% higher recall than pure keyword search. For enterprise search applications, the improvement justifies the added complexity.

Embeddings: Choosing the Right Model

Dense Embeddings for General RAG

OpenAI text-embedding-3-small (1,536 dimensions by default, which the API can shorten to 512 via its dimensions parameter): Strong semantic understanding, multilingual support, $0.02 per 1M tokens. Industry standard for general-purpose RAG. Recommended for 90% of applications.

OpenAI text-embedding-3-large (3,072 dimensions by default): Superior semantic precision, best for complex technical domains, $0.13 per 1M tokens (6.5x the cost of the small model). Use it only when the small model's performance is insufficient.

Cohere Embed v3 (1,024 dimensions): 100+ language support in its multilingual variant and competitive pricing, though each input is limited to 512 tokens. Good alternative to OpenAI for multilingual requirements.

Open-source models (E5, BGE, BERT-based): No API costs, complete privacy (on-premises deployment possible), 768-1024 dimensions. Performance lags commercial models by 5-15%, but cost savings and privacy control often justify the trade-off.

Multimodal Embeddings for Cross-Modal Search

CLIP and similar multimodal models represent text, images, and video in a shared vector space. Search for "red sunset over mountain" and retrieve images matching that description. Search for an image and find similar text descriptions. This unified embedding space powers visual commerce, content discovery, and accessibility applications. The compute cost is higher (50% more than text-only embeddings), but multimodal applications have no alternative.

Dimensionality Trade-Offs

256 dimensions: Lightning-fast search, minimal storage, significant information loss. Use only for real-time high-throughput applications where latency below 10ms is critical.

512 dimensions: Industry standard. Best balance between semantic quality (95%+ of 1,536-dim performance) and efficiency. Recommended default.

768 dimensions: Slightly better semantic capture, still efficient. Growing preference for enterprise applications.

1,024-1,536 dimensions: Highest semantic precision, 2-3x more storage and compute. Use only when domain complexity demands precision (scientific research, legal document retrieval).
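Some models let you choose dimensionality after the fact. OpenAI documents that text-embedding-3 embeddings can be shortened by keeping the leading components and re-normalizing to unit length; this preserves quality only for models trained to support it. A minimal sketch of that operation, using a random vector as a stand-in for a real embedding (the function name `shorten_embedding` is hypothetical):

```python
import numpy as np

def shorten_embedding(vec: np.ndarray, dims: int) -> np.ndarray:
    """Hypothetical helper: keep the first `dims` components, rescale to unit length."""
    head = vec[:dims]
    return head / np.linalg.norm(head)

rng = np.random.default_rng(0)
full = rng.normal(size=1536)
full /= np.linalg.norm(full)  # stand-in for a unit-length 1,536-dim embedding
short = shorten_embedding(full, 512)
print(short.shape)  # (512,)
# Bytes per vector when stored as float32.
print(short.astype(np.float32).nbytes, full.astype(np.float32).nbytes)  # 2048 6144
```

At float32, the 512-dimensional vector needs 2,048 bytes instead of 6,144, the 3x storage gap between the 512 and 1,536 tiers.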

RAG Applications: Where Vector Databases Create Value

Knowledge Management and Document Retrieval

Companies store terabytes of internal documents—wikis, policies, procedures, past decisions. Traditional search (keyword-based) fails when employees use different terminology than the original documents. Semantic search with vector databases enables employees to find relevant information in seconds, cutting research time from hours to minutes. Notion and Stripe implemented this at scale.

Customer Support Automation

Customer service queries are matched against a knowledge base of previous resolutions and FAQs. Semantic search finds relevant articles even when customer phrasing differs from standard documentation. "How do I fix my password?" matches the documentation titled "Account Credential Recovery" semantically. Support automation improved by 30-40% through better semantic matching of customer queries to solution documents.

Legal and Compliance Document Search

Law firms search millions of case documents, finding precedents by conceptual similarity. A case about "breach of fiduciary duty in merger transactions" finds similar cases about "director responsibility in M&A" without exact phrase matching. Search time compressed from hours to seconds. Accuracy improved because semantic search understands legal concepts, not just keyword overlap.

E-Commerce and Visual Discovery

Multimodal embeddings power image search. A query for "red dress with floral pattern" finds matching products, and shoppers can ask for products similar to one they have selected. Shopify's visual search and similar implementations improved conversion rates by 15-25% by making products easier to discover through semantic and visual similarity.

Production Challenges and Solutions

Embedding Staleness and Update Complexity

Documents are added or modified continuously. New document embeddings must be generated and indexed. Real-time indexing adds latency to document creation. Batch indexing (daily or weekly updates) means search results temporarily miss recent documents. Most systems use hybrid approaches: immediate semantic indexing of new documents at lower priority (affecting search ranking slightly), with full re-indexing during off-hours.
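One possible shape for this hybrid approach, sketched under simplifying assumptions: new documents go into a small "fresh" buffer that is searched by brute force immediately, and an off-hours batch job folds the buffer into the main index. The class and method names are hypothetical, a NumPy matrix stands in for a real ANN index, and the slight ranking penalty for new documents is not modeled.

```python
import numpy as np

class FreshnessAwareIndex:
    """Hypothetical two-tier index: a batch-built main store plus a fresh buffer."""

    def __init__(self, dim: int):
        self.main_ids: list[str] = []
        self.main_vecs = np.empty((0, dim), dtype=np.float32)
        self.fresh_ids: list[str] = []
        self.fresh_vecs: list[np.ndarray] = []

    def add(self, doc_id: str, vec: np.ndarray) -> None:
        # Searchable immediately, without touching the main index.
        self.fresh_ids.append(doc_id)
        self.fresh_vecs.append(vec.astype(np.float32))

    def rebuild(self) -> None:
        # Off-hours batch job: fold the buffer into the main index.
        if self.fresh_vecs:
            self.main_vecs = np.vstack([self.main_vecs, np.stack(self.fresh_vecs)])
            self.main_ids += self.fresh_ids
            self.fresh_ids, self.fresh_vecs = [], []

    def search(self, query: np.ndarray, k: int) -> list[str]:
        ids = self.main_ids + self.fresh_ids
        if not ids:
            return []
        vecs = self.main_vecs
        if self.fresh_vecs:
            vecs = np.vstack([vecs, np.stack(self.fresh_vecs)])
        # Cosine similarity over both tiers, merged into a single ranking.
        sims = vecs @ query / (np.linalg.norm(vecs, axis=1) * np.linalg.norm(query))
        return [ids[i] for i in np.argsort(-sims)[:k]]

index = FreshnessAwareIndex(dim=3)
index.add("old-policy", np.array([1.0, 0.0, 0.0]))
index.rebuild()
index.add("new-policy", np.array([0.9, 0.1, 0.0]))  # not yet folded into the main index
print(index.search(np.array([0.9, 0.1, 0.0]), k=2))  # ['new-policy', 'old-policy']
```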

Memory and Storage Costs at Scale

Storing 1 billion 1,536-dimensional vectors requires about 6TB of disk space as float32, or about 3TB when compressed to 2 bytes per component (for example, float16). Keeping all vectors in memory for fast search requires massive hardware. Production systems tier storage: hot vectors (recent, frequently accessed) are kept in memory, warm vectors (older, infrequently accessed) on disk, and cold vectors (archival) in cloud storage with slower retrieval. Tiering reduces memory cost by 40-60% while maintaining performance on hot data.
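These storage figures are straightforward arithmetic: vectors × dimensions × bytes per component. A small helper (the name `raw_vector_bytes` is hypothetical) reproduces them in decimal terabytes; it counts raw vectors only, so index structures such as HNSW graph links add overhead on top.

```python
def raw_vector_bytes(n_vectors: int, dims: int, bytes_per_component: int = 4) -> int:
    """Hypothetical helper: raw vector storage (float32 = 4 bytes/component, float16 = 2)."""
    return n_vectors * dims * bytes_per_component

TB = 10**12  # decimal terabyte
print(raw_vector_bytes(1_000_000_000, 1536) / TB)     # 6.144 (float32)
print(raw_vector_bytes(1_000_000_000, 1536, 2) / TB)  # 3.072 (float16)
```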

Embedding Model Upgrades

When a better embedding model becomes available, all existing vectors must be re-embedded. Re-indexing 1 billion vectors takes weeks. Migrating between embedding models requires running both in parallel temporarily, testing equivalence, and then cutting over. This is operationally expensive. Academic research in embedding conversion (converting vectors from one model space to another via a learned transformation) may reduce this friction, but production systems today budget for periodic full re-indexing.
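The simplest baseline for embedding conversion is a linear map fitted by least squares on a sample of documents embedded with both models. The sketch uses synthetic data in which the "new" model is exactly a linear function of the "old" one, so recovery is perfect; real model pairs are rarely related this simply, and this is not one of the published research methods. The function name is hypothetical, and any fitted map would need validating with retrieval metrics before it replaced re-embedding.

```python
import numpy as np

def fit_linear_map(old_vecs: np.ndarray, new_vecs: np.ndarray) -> np.ndarray:
    """Hypothetical helper: least-squares matrix W such that old_vecs @ W ≈ new_vecs."""
    w, *_ = np.linalg.lstsq(old_vecs, new_vecs, rcond=None)
    return w

rng = np.random.default_rng(0)
# Synthetic stand-ins: 1,000 documents embedded by an "old" 64-dim model and a
# "new" 32-dim model that is, by construction, an exact linear function of it.
old = rng.normal(size=(1000, 64))
true_map = rng.normal(size=(64, 32))
new = old @ true_map

w = fit_linear_map(old[:800], new[:800])          # fit on a paired sample
error = np.abs(old[800:] @ w - new[800:]).max()   # check on held-out documents
print(error < 1e-8)  # True
```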

Cost Optimization Strategies

Dimension Reduction Without Accuracy Loss

OpenAI's 1,536-dimensional embeddings contain redundant information. Reducing to 768 dimensions through principal component analysis (PCA) or similar techniques cuts storage by 50% and search latency by 30-40% with recall loss under 1-2%. Most applications see no meaningful performance degradation. Cost savings compound quickly.
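A minimal PCA reduction from 1,536 to 768 dimensions with NumPy's SVD, assuming the projection is fitted on a representative sample of your embeddings and then applied unchanged to every stored vector and every query. The function names are hypothetical, and the random sample only demonstrates shapes: random vectors have no redundancy to remove, so recall should be measured on real embeddings and held-out queries before committing.

```python
import numpy as np

def fit_pca(vectors: np.ndarray, out_dims: int) -> tuple[np.ndarray, np.ndarray]:
    """Hypothetical helper: (mean, components) for the top `out_dims` principal axes."""
    mean = vectors.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(vectors - mean, full_matrices=False)
    return mean, vt[:out_dims]

def apply_pca(vectors: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    reduced = (vectors - mean) @ components.T
    # Re-normalize so cosine similarity can still be computed as a dot product.
    return reduced / np.linalg.norm(reduced, axis=1, keepdims=True)

rng = np.random.default_rng(0)
sample = rng.normal(size=(2000, 1536)).astype(np.float32)  # stand-in for real embeddings
mean, comps = fit_pca(sample, 768)
reduced = apply_pca(sample, mean, comps)
print(reduced.shape)  # (2000, 768)
```

Halving the dimension halves raw storage per vector; the same `mean` and `comps` must be applied to queries, or query and document vectors will live in different spaces.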

Tiered Storage Architecture

Hot data (last 30 days) in Pinecone with fast search: $1,000/month. Warm data (30-365 days) in self-hosted Qdrant on cost-optimized hardware: $500/month. Cold data (>1 year) in cloud object storage with slower retrieval: $50/month. Total cost for 100M vectors drops from $5,000+/month (all in Pinecone) to about $1,550/month with tiering.

Embedding-as-a-Service vs. Self-Hosted Generation

OpenAI API costs $0.02 per 1M tokens (expensive at scale). Self-hosted embedding models (E5-small, open-source) have zero API cost, only compute cost. At 10M documents requiring re-embedding monthly, OpenAI costs ~$5,000/month. Self-hosted compute costs $500/month. Migration saves $54,000/year.
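The $5,000/month figure depends on document length, which the paragraph leaves implicit: at $0.02 per 1M tokens, 10M documents cost $5,000 only if they average about 25,000 tokens each. A hypothetical helper makes that assumption explicit and reproduces the annual saving:

```python
def monthly_embedding_cost(n_docs: int, tokens_per_doc: int,
                           usd_per_million_tokens: float) -> float:
    """Hypothetical helper: API cost of embedding every document once per month."""
    return n_docs * tokens_per_doc / 1_000_000 * usd_per_million_tokens

api = monthly_embedding_cost(10_000_000, 25_000, 0.02)  # assumes ~25K tokens per document
self_hosted = 500.0                                     # monthly compute cost quoted above
print(api, (api - self_hosted) * 12)  # 5000.0 54000.0
```

Halving the average document length halves the API bill, so the savings case is worth recomputing with your own token counts.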

Key Takeaways

- Vector Databases Enable Semantic Search: Matching meaning rather than keywords turns search from literal term matching into conceptual understanding. Performance gains: 15-25% higher relevance scores.

- Managed vs. Self-Hosted Trade-Off Is Clear: Pinecone: ~$13,000/year for 10M vectors, fully managed. Milvus: $9,600-16,800/year for 10M vectors, requires infrastructure expertise. Break-even at 50-80M vectors favors self-hosting financially.

- Latency Performance Is Benchmark-Dependent: Pinecone: 40-50ms p95. Milvus: sub-50ms with proper tuning. Qdrant: 30-40ms (fastest). Weaviate: 50-70ms. Difference matters for user-facing applications, less so for batch processing.

- Hybrid Search (Semantic + Keyword) Outperforms Either Alone: Hybrid achieves 30-40% higher recall than pure keyword search and 15-25% higher precision than pure semantic search. Reciprocal Rank Fusion combines results without manual tuning.

- Embedding Dimensionality Is a Tuning Parameter: 512 dimensions = industry standard. 256 = ultra-fast for high-QPS systems. 1,024+ = maximum precision for complex domains. Reducing 1,536 → 768 dims cuts costs 50% with <2% recall loss.

- Dimension Reduction Saves 50% Costs With Minimal Accuracy Loss: PCA reduction from 1,536 → 768 dims reduces storage 50% and search latency 30-40% while maintaining <1-2% recall loss in most applications.

- Tiered Storage Reduces Costs 60-70% at Scale: Hot/warm/cold tiers (fast/medium/slow storage) enable cost optimization without sacrificing performance on recent data where it matters most.

- Re-Embedding at Model Upgrades Is Expensive: 1B vectors require weeks to re-embed. Production systems budget for periodic full re-indexing. Embedding conversion research may reduce this friction by 2026.

The Verdict: Vector Databases Are Now Essential Production Infrastructure

Vector databases transitioned from experimental (2023) to standard (2024) to essential (2025) infrastructure for AI applications. Organizations that built vector search capabilities before 2024 now have a 12-18 month competitive lead as others rush to catch up. The technology is mature, the ecosystem is competitive (driving prices down), and the ROI is proven. The remaining questions are operational: managed or self-hosted, which database, what embedding model, and how to optimize costs at scale. These are execution details. The strategic decision of whether to adopt vector database-powered semantic search has effectively been made by the market. Non-adoption means accepting inferior search capabilities compared to competitors.

